Observing the LOCC at the application level suggests that the project grew in complexity over the first 4 versions and was then refactored and cleaned up in the last version. This is encouraging and should be noted as good practice for future iterations.

The method count metric is not consistent with the refactoring described in the LOCC analysis. If true refactoring had occurred, one would expect the method count to climb in proportion to the amount of code that was refactored, since refactoring typically breaks large methods into smaller ones. Instead, this data implies that the code was not really refactored but rather made less complex overall to achieve the lower line count in the last version.

The class count metric further suggests that either significant re-architecting was completed in the last version or significant functionality was removed from the application entirely.

Further analysis shows that the services and gui packages were fairly stable from version 3 onwards. Both include only minimal changes, which seem to have added lines of code, methods, and classes. The most significant contributor to these metrics appears to be the app package, which shrank in line, method, and class count. In retrospect, most of that package was inherited from sample open source code. Because we brought in most of that open source code and stripped out what was unnecessary in the latest revision, the metrics show that we have cleaned up and reduced the total lines, methods, and classes.

We can use what we have learned about how the metrics are collected to note significant changes between revisions (such as the one described in the conclusion above), so that we can discount such major changes when looking for useful information about refactoring and cleanup that shows our code is likely to be readable and modular. The relative method and line counts can also help indicate how likely our code is to carry bugs and other defects.

Metrics Used From Martin: (no longer functions with updated JXTA build)

Analyzing the results of the Martin Metrics produced interesting and useful insight into the design of our system. The results allowed us to target areas that should be analyzed and refactored in order to improve the reusability of our classes and make them less sensitive to change.

Afferent and Efferent Coupling are not very useful as analytical metrics on their own. The metric for Efferent Coupling was much higher than the metric for Afferent Coupling. This is not unusual for a system developed in Java, because the classes a developer creates often depend heavily on classes from the Java standard library. Where Afferent and Efferent Coupling become more powerful is as the inputs to the Instability metric, I = Ce / (Ca + Ce), where Ca is the afferent and Ce the efferent coupling of a package.
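As a rough sketch of how the Instability value is derived from the two coupling counts, the Java class below computes I = Ce / (Ca + Ce) for a single package. The class and method names are hypothetical, the treatment of a package with no couplings at all (reported as 0) is an assumption, and the counts in `main` are made-up inputs rather than our measured values.

```java
// Martin's Instability metric for one package: I = Ce / (Ca + Ce).
final class MartinInstability {
    private MartinInstability() {}

    /**
     * @param afferent Ca, the number of outside classes that depend on classes in the package
     * @param efferent Ce, the number of outside classes that classes in the package depend on
     * @return a value from 0 (maximally stable) to 1 (maximally unstable)
     */
    static double instability(int afferent, int efferent) {
        if (afferent < 0 || efferent < 0) {
            throw new IllegalArgumentException("coupling counts must be non-negative");
        }
        int total = afferent + efferent;
        // Assumption: a package with no couplings at all is reported as I = 0.
        return total == 0 ? 0.0 : (double) efferent / total;
    }

    public static void main(String[] args) {
        // Made-up counts for illustration only, not measurements from our packages.
        System.out.println(instability(2, 50)); // few incoming, many outgoing dependencies
        System.out.println(instability(20, 10));
    }
}
```

A high Ce with a low Ca, which is typical when most dependencies point at the Java standard library, pushes I toward 1.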

The three packages analyzed (app, gui, services) have Instability values of .962, .973, and .636 respectively. These values show that the classes in the three packages are not used by a large number of other packages, but do depend on a number of other packages. The three packages can therefore be changed easily, because few others depend on them.

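The app, gui, and services packages all had a value of 0 for the Abstractness metric, which is the ratio of abstract classes and interfaces to the total number of classes in a package. This means that the three packages contain no abstract classes or interfaces; their concrete classes can still be extended unless they are declared final. This is not necessarily a negative attribute of a package. To analyze the effect of a 0 Abstractness value we must move on to the Distance from the Main Sequence metric.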
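To make the difference between "abstract" and "extendable" concrete, the sketch below computes Abstractness for a list of classes via reflection and separately checks whether a class can be subclassed. The class name is hypothetical, JDK classes stand in for a package's contents, and treating an empty package as A = 0 is an assumption.

```java
import java.lang.reflect.Modifier;
import java.util.AbstractList;
import java.util.ArrayList;
import java.util.List;

// Martin's Abstractness metric: A = (abstract classes + interfaces) / (total classes).
final class AbstractnessSketch {
    private AbstractnessSketch() {}

    static double abstractness(List<Class<?>> packageClasses) {
        if (packageClasses.isEmpty()) {
            return 0.0; // assumption: an empty package is reported as A = 0
        }
        long abstractCount = packageClasses.stream()
                .filter(c -> c.isInterface() || Modifier.isAbstract(c.getModifiers()))
                .count();
        return (double) abstractCount / packageClasses.size();
    }

    // A concrete (non-abstract) class can still be subclassed unless it is final.
    // Rough check for classes only: interfaces are excluded, and classes whose
    // constructors are all private are not detected.
    static boolean canBeSubclassed(Class<?> c) {
        return !c.isInterface() && !Modifier.isFinal(c.getModifiers());
    }

    public static void main(String[] args) {
        // JDK classes stand in for a package's contents here.
        List<Class<?>> sample = List.<Class<?>>of(ArrayList.class, AbstractList.class, List.class);
        System.out.println(abstractness(sample)); // 2 of the 3 are abstract or interfaces
        System.out.println(canBeSubclassed(ArrayList.class)); // concrete, yet extendable
    }
}
```

A package made entirely of classes like `ArrayList` would score A = 0 while every class in it remains open to extension.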

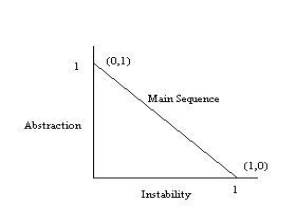

The idea behind the Distance from the Main Sequence metric is that the more abstract a package is, the more stable it should be. The ideal extremes are a package with A=0 and I=1 (concrete and unstable) or one with A=1 and I=0 (abstract and stable). More generally, a good package balances abstractness and instability: plotted as a point on the graph above, its values of A and I should fall on or close to the Main Sequence line, A + I = 1.

Packages should have a normalized value for the Distance from the Main Sequence metric near zero. A value close to zero means that the package is close to the Main Sequence; such a package is more reusable and less sensitive to change. The values reported for our packages are signed, equal to A + I - 1, so the magnitude shows how far a package lies from the line and a negative sign shows that it lies below it (more concrete than its stability warrants). The app and gui packages in our system had normalized Distance values of -.038 and -.027 respectively, which is good. However, the normalized Distance value for the services package deviated further from zero, to -.364, so the classes in the services package should be examined and refactored. A greater number of classes in other packages depend on the classes in services than on the classes in app and gui, which is consistent with its lower Instability of .636. When refactoring services, the developers need to either create more abstract classes or interfaces (raising A) or reduce the number of outside dependencies on its classes (raising I).
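The reported Distance values can be reproduced from the Abstractness and Instability figures above. The sketch below (class and method names are hypothetical) computes both the signed distance A + I - 1 and its normalized magnitude |A + I - 1|, then applies the signed form to our three packages with A = 0.

```java
import java.util.Locale;

final class MainSequenceDistance {
    private MainSequenceDistance() {}

    // Signed distance from the Main Sequence (the line A + I = 1).
    // Negative: below the line, toward the concrete and stable corner Martin calls the zone of pain.
    // Positive: above the line, toward the abstract and unstable corner.
    static double signedDistance(double abstractness, double instability) {
        return abstractness + instability - 1.0;
    }

    // Normalized distance D' = |A + I - 1|, ranging from 0 (on the line) to 1.
    static double normalizedDistance(double abstractness, double instability) {
        return Math.abs(signedDistance(abstractness, instability));
    }

    public static void main(String[] args) {
        String[] names = {"app", "gui", "services"};
        double[] instability = {0.962, 0.973, 0.636}; // Instability values reported above
        for (int i = 0; i < names.length; i++) {
            // A = 0 for all three packages, as reported above.
            System.out.printf(Locale.ROOT, "%s: %.3f%n", names[i], signedDistance(0.0, instability[i]));
        }
    }
}
```

Running `main` prints -0.038, -0.027, and -0.364, matching the reported values, which is what suggests the tool reports the signed form.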

Metrics Used From RevJava:

There are a number of classes that show a high level of inheritance. At a depth of 6, the gui classes are still acceptable, considering that Java GUI code typically results in a deep inheritance hierarchy. The average method size for our code came out to 27.
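Depth of inheritance is essentially the number of superclass links between a class and `java.lang.Object`. A class that directly extends `javax.swing.JFrame` inherits the chain JFrame, Frame, Window, Container, Component, Object, which by itself puts it six links below Object; that is why a depth of 6 is expected for Swing screens. The sketch below (hypothetical names) counts those links; RevJava may count differently, for example starting at 1 or including interfaces.

```java
final class InheritanceDepth {
    private InheritanceDepth() {}

    // Number of superclass links from c up to java.lang.Object; Object itself has depth 0.
    // Interfaces are not counted, and an interface type reports depth 0 here.
    static int depth(Class<?> c) {
        int depth = 0;
        for (Class<?> s = c.getSuperclass(); s != null; s = s.getSuperclass()) {
            depth++;
        }
        return depth;
    }

    public static void main(String[] args) {
        // ArrayList -> AbstractList -> AbstractCollection -> Object
        System.out.println(depth(java.util.ArrayList.class)); // prints 3
    }
}
```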

RevJava discovered several large methods, including discoveryEvent at 147 lines inside PeerDiscovery and the init method inside each of the GUI-related screens.

Other notable results include a high occurrence of critics about breaking the Law of Demeter, mostly where classes work with the user interface. The metrics also make it obvious which methods are yet to be implemented, since those methods are marked as unused. It is possible that some of these unused methods should in fact be removed.

Of the three packages in the system, the services package has a total method size of 257 and the app package a method size of 476, while the gui method size is a whopping 2384. Much of the current functionality is apparently contained within the gui package. The gui should be separated from the code logic, so the app and services packages may need to take over some of the functionality currently found within the gui package. Upon further inspection, the gui package contains 20 classes while the app and services packages contain a mere 6 classes. The 20 classes represent 20 different screens in the system. With 20 classes averaging 2.7 methods each, it's understandable why the gui package is large relative to the rest of the system. From this, we can tell that the gui package is ahead of the rest of the system, and more effort should be placed on implementing the features that the gui package presents to the user.
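The package totals can be put in proportion with a little arithmetic. The sketch below uses only the three RevJava method sizes quoted in this section; the class name is hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.Locale;
import java.util.Map;

final class MethodSizeShare {
    private MethodSizeShare() {}

    // Returns each package's fraction of the summed method size, preserving input order.
    static Map<String, Double> shares(Map<String, Integer> sizes) {
        int total = sizes.values().stream().mapToInt(Integer::intValue).sum();
        Map<String, Double> result = new LinkedHashMap<>();
        for (Map.Entry<String, Integer> e : sizes.entrySet()) {
            result.put(e.getKey(), total == 0 ? 0.0 : (double) e.getValue() / total);
        }
        return result;
    }

    public static void main(String[] args) {
        Map<String, Integer> sizes = new LinkedHashMap<>();
        sizes.put("services", 257); // RevJava method sizes quoted above
        sizes.put("app", 476);
        sizes.put("gui", 2384);
        shares(sizes).forEach((pkg, share) ->
                System.out.printf(Locale.ROOT, "%s: %.1f%%%n", pkg, share * 100));
    }
}
```

The gui package accounts for 2384 of the combined 3117, roughly 76%, which quantifies how much of the current functionality sits in the gui.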

RSM and JMT Analysis

The majority of methods in our application have low cyclomatic complexity, but two methods stand out: StatusScreen.updatePeerVector in the GUI package and PeerDiscovery.discoveryEvent in the Services package. These methods have cyclomatic complexities of twelve and eleven respectively, and should be examined to see whether such complexity is truly necessary. If these methods cannot be simplified, we must be sure to focus increased testing efforts on them.
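For a single method, McCabe's cyclomatic complexity equals the number of decision points plus one, and it is also the number of linearly independent paths through the method, so a complexity of twelve implies at least twelve test cases for basis path coverage. The token-counting sketch below is our own rough approximation for illustration (hypothetical names; comments, string literals, and generic wildcards are not handled) and is not how RSM or JMT compute the value.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

final class CyclomaticEstimate {
    private CyclomaticEstimate() {}

    // Decision points counted: branching keywords, short-circuit operators, and '?'.
    // Approximation only: comments, string literals, and generic wildcards such as
    // List<?> are not stripped, so they can inflate the count.
    private static final Pattern DECISION = Pattern.compile(
            "\\b(if|for|while|case|catch)\\b|&&|\\|\\||\\?");

    static int estimate(String methodBody) {
        Matcher m = DECISION.matcher(methodBody);
        int decisions = 0;
        while (m.find()) {
            decisions++;
        }
        return decisions + 1; // McCabe: V(G) = decision points + 1 for one method
    }

    public static void main(String[] args) {
        // A made-up method body, not code from StatusScreen or PeerDiscovery.
        String body = "if (peers == null || peers.isEmpty()) { return; } "
                + "for (Object p : peers) { if (p != null) { count++; } }";
        System.out.println(estimate(body)); // prints 5
    }
}
```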

Comparison of the Public Interface Methods and Methods per Class metrics revealed that in the GUI package, all constructors have package visibility, while in the other packages, the constructors have public visibility. We should determine whether this difference is by design or is an inconsistency in the application that should be remedied.

The comparison of the Public Interface Methods and Methods per Class metrics also reveals that there are very few private methods in the application. It is possible that the complexity of our methods, particularly the two mentioned in 6.1 above, could be reduced by adding private helper methods to the classes.
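Both visibility findings can be spot-checked class by class with reflection. The sketch below tallies declared constructors and methods by access level; the names are hypothetical, and `SampleScreen` is a made-up stand-in rather than code from the project.

```java
import java.lang.reflect.Constructor;
import java.lang.reflect.Method;
import java.lang.reflect.Modifier;
import java.util.EnumMap;
import java.util.Map;

final class VisibilityReport {
    private VisibilityReport() {}

    enum Visibility { PUBLIC, PROTECTED, PACKAGE, PRIVATE }

    static Visibility of(int modifiers) {
        if (Modifier.isPublic(modifiers)) return Visibility.PUBLIC;
        if (Modifier.isProtected(modifiers)) return Visibility.PROTECTED;
        if (Modifier.isPrivate(modifiers)) return Visibility.PRIVATE;
        return Visibility.PACKAGE; // no access modifier: package visibility
    }

    // Tallies the methods declared in c (inherited ones are not included) by visibility.
    static Map<Visibility, Integer> methods(Class<?> c) {
        Map<Visibility, Integer> counts = new EnumMap<>(Visibility.class);
        for (Method m : c.getDeclaredMethods()) {
            if (!m.isSynthetic()) { // skip compiler-generated methods
                counts.merge(of(m.getModifiers()), 1, Integer::sum);
            }
        }
        return counts;
    }

    // Tallies the constructors declared in c by visibility.
    static Map<Visibility, Integer> constructors(Class<?> c) {
        Map<Visibility, Integer> counts = new EnumMap<>(Visibility.class);
        for (Constructor<?> k : c.getDeclaredConstructors()) {
            if (!k.isSynthetic()) {
                counts.merge(of(k.getModifiers()), 1, Integer::sum);
            }
        }
        return counts;
    }

    // Hypothetical stand-in for a screen class; not code from the project.
    static final class SampleScreen {
        SampleScreen() {}             // package-visible constructor
        public void show() {}
        void refresh() {}
        private void layoutPeers() {} // a private helper method
    }

    public static void main(String[] args) {
        System.out.println("constructors: " + constructors(SampleScreen.class));
        System.out.println("methods: " + methods(SampleScreen.class));
    }
}
```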

In some cases, we ran different tools that measure the same metric so that we could gain confidence in our results. We discovered that JMT and RSM produced parallel but separate result sets when counting the number of methods in each class. We determined that this is due to a difference in how the tools handle inner classes: RSM excludes the methods of inner classes from its count for a class, while JMT includes them. We think that both approaches are valid and find it useful to have both, because a large difference might indicate a class with an overly complex set of inner classes.
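The two counting conventions can be imitated with reflection to see how far apart they drift for a given class. In the sketch below (hypothetical names), `ownMethods` counts only methods declared directly in a class, as the text describes RSM doing, and `withMemberClasses` also adds the methods of member classes recursively, as described for JMT. `getDeclaredClasses` returns member classes only, so anonymous and local classes are not included.

```java
import java.util.Arrays;

final class MethodCounts {
    private MethodCounts() {}

    // Methods declared directly in c, ignoring compiler-generated ones.
    static int ownMethods(Class<?> c) {
        return (int) Arrays.stream(c.getDeclaredMethods())
                .filter(m -> !m.isSynthetic())
                .count();
    }

    // Methods declared in c plus, recursively, in its member (inner and nested) classes.
    static int withMemberClasses(Class<?> c) {
        int total = ownMethods(c);
        for (Class<?> member : c.getDeclaredClasses()) {
            total += withMemberClasses(member);
        }
        return total;
    }

    // A hypothetical class with one inner class, used only to show the difference.
    static final class Outer {
        void a() {}
        void b() {}

        final class Inner {
            void c() {}
        }
    }

    public static void main(String[] args) {
        System.out.println(ownMethods(Outer.class));        // prints 2
        System.out.println(withMemberClasses(Outer.class)); // prints 3
    }
}
```

A class whose second count is many times its first is a candidate for the inner-class review suggested above.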